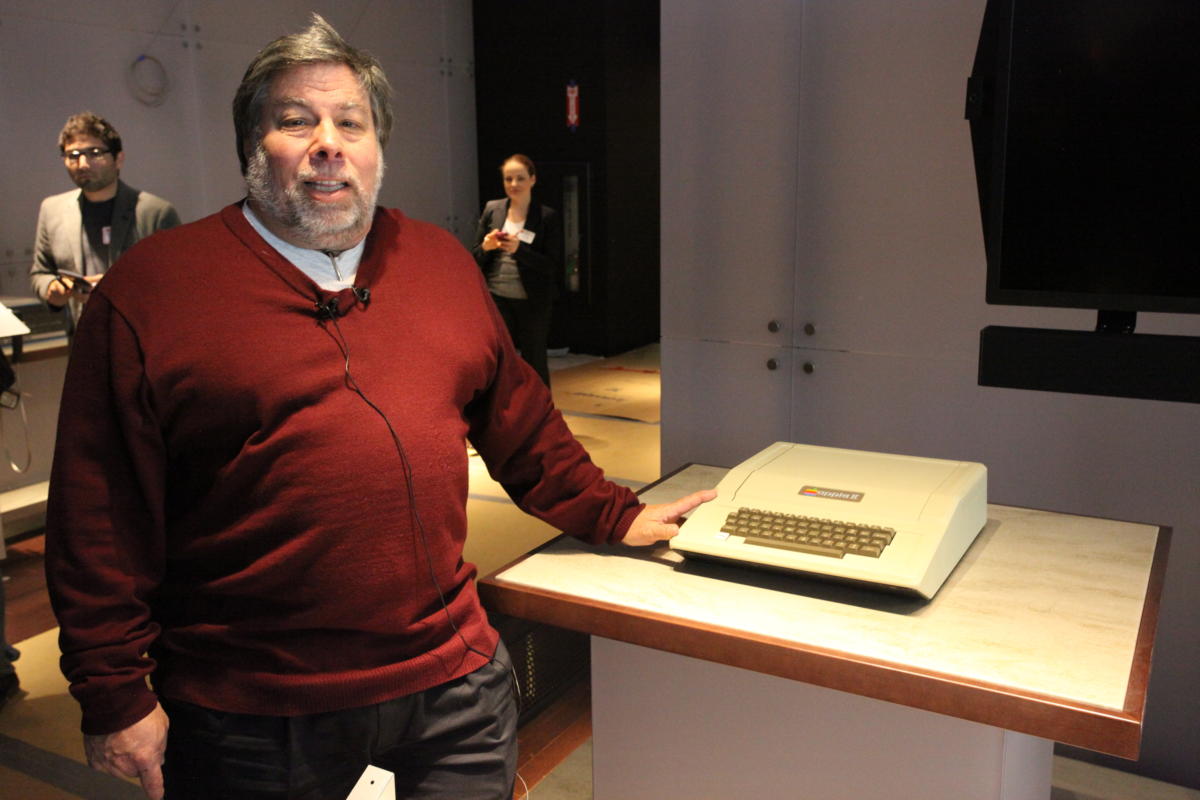

Apple co-founder Steve Wozniak has been touring the media to discuss the perils of generative artificial intelligence (AI), warning people to be wary of its negative impacts. Speaking to both the BBC and Fox News, he stressed that AI can misuse personal data, and raised concerns it could help scammers generate even more effective scams, from identity fraud to phishing to cracking passwords and beyond.

AI puts a spammer in the works

“We're getting hit with so much spam, things trying to take over our accounts and our passwords, trying to trick us into them,” he said.

(Federal Trade Commission (FTC) Chair Lina Khan has raised the same concern.)

Wozniak voiced concern that technologies such as ChatGPT could be used to build really convincing fraudulent emails, for example. While he’s ambivalent in the sense he can see the advantages the tech can provide, he also fears that misuse of these powerful tools can become a real threat in the hands of bad people.

“AI is so intelligent it’s open to the bad players, the ones that want to trick you about who they are,” he warned.

A danger to business

Security researchers are equally worried. In business, 53% of decision makers believe AI will help attackers build more convincing email scams. Most recently, BlackBerry warned that a successful attack credited to ChatGPT will emerge within the next 12 months.

Wozniak was among more than 1,000 tech luminaries who signed an open letter urging a slowdown in AI development. That letter asked AI labs to pause the training of systems more powerful than GPT-4 for at least six months.

Even so, Wozniak also warned against a knee-jerk reaction to implied fear: “We should really be objective,” he said on the Fox News show, Your World with Neil Cavuto. “Where are the danger points?”

The petition warned against some of these points, not least that, “Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: 'Should we let machines flood our information channels with propaganda and untruth?'”

We’ve also seen cases in which the use of generative AI models has led to the disclosure of business secrets, prompting security warnings from intelligence agencies.

An existential threat?

Woz’s view is no solitary warning. Geoffrey Hinton, the so-called godfather of AI, holds a similar opinion and recently left Google so he could warn of AI's dangers. Hinton sees AI replacing human workers on a massive scale, warning that the impact could threaten stability and potentially lead to extinction.

Like others in tech, Wozniak argues that industry and government should identify the danger points and act now to minimize the risks.

Pointing to what we now know concerning the risks of social media, he said, “What if we had just sat down and thought about it before the social Web came,” and created frameworks to help manage and minimize those risks.

Governments move slowly

Many governments are moving to figure out if AI tools need to be regulated, and how, but that process is unlikely to keep pace with AI evolution. The Biden administration recently announced a voluntary set of AI rules intended to deal with safety and privacy and to initiate discussion on the real and existential threat of AI technologies, including ChatGPT.

“AI is one of the most powerful technologies of our time, but in order to seize the opportunities it presents, we must first mitigate its risks,” the White House said in a statement. “President Biden has been clear that when it comes to AI, we must place people and communities at the center by supporting responsible innovation that serves the public good, while protecting our society, security, and economy.”

In the UK, the Competition and Markets Authority (CMA) is reviewing the market for AI. “Our goal is to help this new, rapidly scaling technology develop in ways that ensure open, competitive markets and effective consumer protection,” said Sarah Cardell, CMA's chief executive.

The UK government, however, seems keen on a hands-off approach to use of this technology.

A reaction to the action

These efforts face a backlash from some in tech, who argue that slowing AI evolution in some nations could give others the chance to get ahead in applying the tech. Microsoft co-founder Bill Gates is one of those making this argument.

Hinton recently shared his “one hope” regarding AI: that competing governments can agree to rein in its use, given that all parties face an existential threat. “We’re all in the same boat with respect to the existential threat so we all ought to be able to cooperate on trying to stop it,” he said.

We were warned

The tragedy, of course, is that the existential risks inherent in AI have been flagged for years. Back in 1863, novelist Samuel Butler warned: “The upshot is simply a question of time, but that the time will come when the machines will hold the real supremacy over the world and its inhabitants is what no person of a truly philosophic mind can for a moment question.”

The Apple co-founder doesn’t seem terribly optimistic that effective constraints on AI use will be put in place. “The forces that drive for money compared to the forces that drive for caring about us, for love — forces that drive for money usually win out. It’s sort of sad,” he told the BBC.

May 10, 2023 at 06:27PM

Steve Wozniak: ChatGPT-type tech may threaten us all - Computerworld